The Trolley Problem Goes Viral: How Your Online Morality Quiz Is Training the World's AI

Viral morality quizzes are more than just a distraction. They're creating a massive dataset on human ethics that is shaping the future of AI decision-making.

The Lede: This Isn't About You. It's About the Code.

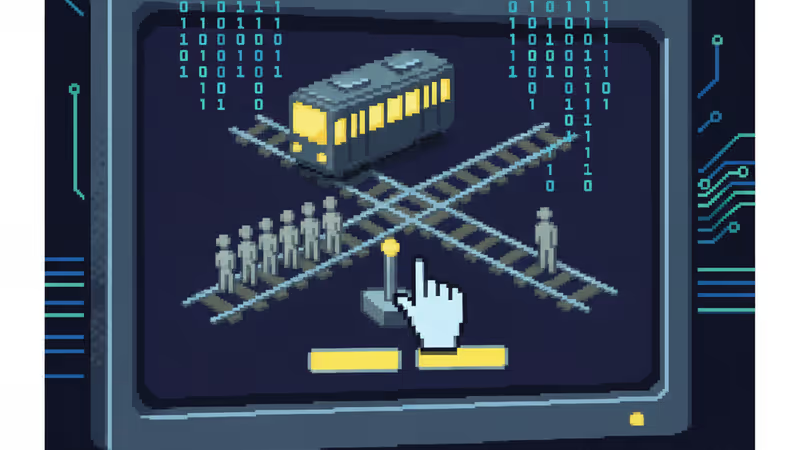

You’ve seen them in your feed: viral quizzes posing thorny moral dilemmas. Save the brilliant scientist or the young student? Lie for your child or uphold the truth? They may look like trivial entertainment, but taken together they amount to one of the largest, most chaotic, real-time experiments in human ethics ever conducted. For leaders in tech and business, this isn't a distraction; it's a live look at one of the hardest problems in deploying autonomous systems: codifying a universal moral compass. The aggregated responses to these seemingly simple questions form a raw, unfiltered dataset that can inform the very ethical frameworks you will soon be embedding in your AI products, from self-driving fleets to automated HR systems.

Why It Matters: From Clicks to Code

The core issue for any organization deploying AI is the problem of edge cases—the unpredictable situations where an algorithm must make a value judgment. The dilemmas presented in these quizzes are precisely that: a collection of high-stakes, ambiguous edge cases. The 'wisdom of the crowd' generated by these platforms has profound second-order effects:

- De-Risking AI Deployment: Understanding public moral sentiment on a global scale can flag potential PR disasters before they happen. An AI that chooses to save the 70-year-old scientist might be 'logically' sound but could trigger a massive public backlash if sentiment favors the 19-year-old student.

- Product Development Roadmaps: This data highlights the areas where human values are most divergent and contentious. This is a direct signal to product teams about where to prioritize human-in-the-loop systems versus full autonomy.

- Regulatory and Legal Precedent: In the absence of clear laws governing AI decisions, public consensus often becomes the de facto standard. These datasets, however unscientific, are shaping the societal expectations that will eventually become legal frameworks. Ignoring them is like ignoring a focus group of a billion people.
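The human-in-the-loop signal in the second point can be made concrete. The sketch below is a minimal illustration, not a description of how any quiz platform or vendor actually works: it assumes responses arrive as hypothetical `(dilemma_id, choice)` pairs, scores how divided the crowd is with normalized Shannon entropy, and routes any dilemma above an arbitrary, hypothetical threshold of 0.9 to human review. The names `route_dilemmas`, `normalized_entropy`, and `CONTESTED_THRESHOLD` are illustrative only.

```python
import math
from collections import Counter, defaultdict

# Hypothetical threshold: dilemmas whose normalized entropy exceeds this
# value are treated as "contested" and routed to a human reviewer.
CONTESTED_THRESHOLD = 0.9


def normalized_entropy(counts):
    """Shannon entropy of a choice distribution, scaled to [0, 1].

    0 means everyone picked the same option; 1 means responses were split
    evenly across all options that received at least one vote.
    """
    total = sum(counts.values())
    if total == 0 or len(counts) < 2:
        return 0.0
    h = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return h / math.log2(len(counts))


def route_dilemmas(responses, threshold=CONTESTED_THRESHOLD):
    """Map each dilemma to 'human_in_the_loop' or 'autonomous'.

    `responses` is an iterable of (dilemma_id, choice) pairs, an assumed
    format for aggregated quiz answers.
    """
    tallies = defaultdict(Counter)
    for dilemma_id, choice in responses:
        tallies[dilemma_id][choice] += 1
    return {
        dilemma_id: (
            "human_in_the_loop"
            if normalized_entropy(counts) > threshold
            else "autonomous"
        )
        for dilemma_id, counts in tallies.items()
    }


if __name__ == "__main__":
    # Toy, made-up responses for illustration only (not real survey data).
    sample = (
        [("scientist_vs_student", "student")] * 52
        + [("scientist_vs_student", "scientist")] * 48
        + [("report_own_mistake", "report")] * 95
        + [("report_own_mistake", "conceal")] * 5
    )
    print(route_dilemmas(sample))
```

With this invented data, the near 52/48 split on the scientist-versus-student dilemma scores close to 1.0 and is routed to a human, while the lopsided 95/5 split scores about 0.29 and stays autonomous. Entropy is only one possible divergence measure; a real system would also need to account for sample size and for who actually answered the quiz.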

The Analysis: The Moral Machine, Unleashed

This phenomenon is the mass-market, gamified evolution of academic experiments like MIT’s “Moral Machine.” That project specifically crowdsourced preferences for how autonomous vehicles should handle unavoidable accidents, in effect a sophisticated, global Trolley Problem. It revealed marked cultural differences; for example, participants from more collectivist cultures showed a weaker preference for sparing the young over the elderly, while those from more individualistic cultures favored the young more strongly.

Today's viral quizzes, while less structured, operate on the same principle but at a far greater scale and across a broader set of dilemmas. They move beyond the self-driving car into the messy realities of professional ethics (do you report your own mistake?), personal loyalty (do you spy for a friend?), and resource allocation (who gets the organ transplant?). This isn’t a flaw; it's the point. That breadth suggests that any single, unified ethical theory (like pure utilitarianism) is brittle and fails to capture the contextual, emotional, and cultural nuances of human decision-making. An AI platform that grasps this nuance stands to gain a significant competitive advantage in user trust and adoption.

PRISM Insight: The Rise of 'Ethics-as-a-Service'

The immense challenge of translating this messy human data into machine-readable logic creates a new and critical market: Ethics-as-a-Service (EaaS). We anticipate a surge in investment toward platforms that offer AI ethics frameworks, simulation environments, and auditing tools. These companies will not sell a single 'correct' answer. Instead, they will provide systems that allow an AI to:

- Model multiple ethical frameworks (e.g., utilitarian, deontological, virtue ethics).

- Weight decisions based on regional, cultural, and user-defined values.

- Provide a transparent, explainable log of its ethical calculus (XAI).
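To make the three capabilities above concrete, here is a minimal sketch under loudly stated assumptions: two toy scoring functions stand in for utilitarian and deontological reasoning (virtue ethics is omitted for brevity), `REGION_WEIGHTS` holds invented regional weight profiles, and the `log` list plays the role of the explainable audit trail. Every name here (`Option`, `decide`, `FRAMEWORKS`, `REGION_WEIGHTS`) is hypothetical and does not reflect any real EaaS product or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Option:
    """One candidate action in a dilemma (hypothetical, deliberately crude schema)."""
    name: str
    lives_saved: int   # input to the toy utilitarian scorer
    breaks_rule: bool  # input to the toy deontological scorer, e.g. "actively harms a bystander"


# Toy scorers returning a value in [0, 1]. They are placeholders, not
# faithful models of utilitarian or deontological ethics.
def utilitarian(option: Option, options: List[Option]) -> float:
    most = max(o.lives_saved for o in options)
    return option.lives_saved / most if most else 0.0


def deontological(option: Option, options: List[Option]) -> float:
    return 0.0 if option.breaks_rule else 1.0


FRAMEWORKS: Dict[str, Callable[[Option, List[Option]], float]] = {
    "utilitarian": utilitarian,
    "deontological": deontological,
}

# Hypothetical regional weight profiles; real values would have to come from
# research or policy, not from this example.
REGION_WEIGHTS: Dict[str, Dict[str, float]] = {
    "region_a": {"utilitarian": 0.7, "deontological": 0.3},
    "region_b": {"utilitarian": 0.3, "deontological": 0.7},
}


@dataclass
class Decision:
    choice: str
    log: List[str] = field(default_factory=list)  # human-readable audit trail


def decide(options: List[Option], weights: Dict[str, float]) -> Decision:
    """Choose the option with the highest weighted score, logging every term."""
    total_w = sum(weights.values())
    if total_w <= 0:
        raise ValueError("weights must sum to a positive number")
    log: List[str] = []
    best_name, best_score = "", float("-inf")
    for opt in options:
        score = 0.0
        for fw_name, w in weights.items():
            s = FRAMEWORKS[fw_name](opt, options)
            share = w / total_w  # normalize so weights need not sum to 1
            score += share * s
            log.append(f"{opt.name}: {fw_name}={s:.2f} x weight {share:.2f}")
        log.append(f"{opt.name}: total={score:.2f}")
        if score > best_score:  # ties keep the earlier option
            best_name, best_score = opt.name, score
    log.append(f"chosen: {best_name}")
    return Decision(best_name, log)


if __name__ == "__main__":
    dilemma = [
        Option("divert", lives_saved=5, breaks_rule=True),
        Option("stay", lives_saved=1, breaks_rule=False),
    ]
    for region, weights in REGION_WEIGHTS.items():
        result = decide(dilemma, weights)
        print(region, "->", result.choice)
        for line in result.log:
            print("   ", line)
```

Run on the classic trolley setup, the same dilemma yields different choices: `region_a`, which weights the utilitarian score more heavily, picks `divert` (total 0.70 versus 0.44), while `region_b` picks `stay` (0.76 versus 0.30). The point of the design is that the divergence is visible line by line in the log rather than buried inside the model.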

Investing in pure AI capability is no longer enough. The next unicorns will be the companies that solve the governance and alignment problem, making AI not just powerful, but trustworthy and defensible. The raw sentiment data from these viral quizzes is the market research indicating precisely where these EaaS tools are needed most.

PRISM's Take: The Coming Clash of Codified Values

These viral dilemmas reveal the uncomfortable truth: there is no global consensus on what is 'right'. The critical strategic question for any global tech company is no longer, "Can our AI make a moral choice?" but rather, "Whose morality will our AI choose?" Will it be the morality of Silicon Valley engineers, a UN charter, or the aggregated, chaotic, and deeply human sentiment reflected in a billion clicks on a quiz? The winner in the next decade of AI won't be the one with the fastest algorithm, but the one with the most sophisticated and adaptable ethical architecture. The entertainment of the masses is, quite literally, programming the machines of the future. The time to build your ethical playbook was yesterday.
