Google's New Bet: FunctionGemma Is a Tiny AI That Puts a 'Traffic Controller' on Your Phone

Google has released FunctionGemma, a 270M-parameter Small Language Model for on-device function calling. Discover how this edge AI model offers a privacy-first, low-latency, cost-effective alternative to cloud-based LLMs.

While the industry chases trillion-parameter scale in the cloud, Google just made a sharp strategic pivot. The company has released FunctionGemma, a tiny-but-mighty 270-million-parameter AI model designed to run locally on phones and browsers, bypassing the cloud for one of the most critical bottlenecks in app development: reliable execution.

Unlike general-purpose chatbots, FunctionGemma is engineered for a single utility: translating natural language commands into structured code that apps can actually execute. It's Google DeepMind's bet on "Small Language Models" (SLMs) as the new frontier, offering developers a privacy-first, low-latency "router" that can handle complex logic on-device.

FunctionGemma is available immediately for download on Hugging Face and Kaggle and can be seen in action in the Google AI Edge Gallery app on the Google Play Store.

The Performance Leap: From 58% to 85% Accuracy

At its core, FunctionGemma addresses the "execution gap." Standard LLMs excel at conversation but often fail to reliably trigger software actions on resource-constrained devices.
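To make the "execution gap" concrete, here is a minimal Python sketch of the app-side half of function calling. It assumes the model emits a JSON object with a function name and arguments, and it checks that object against a registry before running anything. The JSON shape, the `place_tile` function, and the `REGISTRY` table are hypothetical illustrations, not FunctionGemma's actual output format.

```python
import json

# Hypothetical app function; the name and signature are illustrative only.
def place_tile(row: int, col: int) -> str:
    return f"tile placed at ({row}, {col})"

# Registry: function name -> (callable, required argument names and types).
REGISTRY = {
    "place_tile": (place_tile, {"row": int, "col": int}),
}

def dispatch(model_output: str) -> str:
    """Validate a model-emitted call and run it; raise ValueError if invalid."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("call must be a JSON object")

    name = call.get("name")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in REGISTRY:
        raise ValueError(f"unknown function: {name!r}")

    func, schema = REGISTRY[name]
    if not isinstance(args, dict) or set(args) != set(schema):
        raise ValueError(f"expected arguments {sorted(schema)}")
    for key, expected_type in schema.items():
        value = args[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"argument {key!r} must be {expected_type.__name__}")

    return func(**args)

if __name__ == "__main__":
    output = '{"name": "place_tile", "arguments": {"row": 2, "col": 5}}'
    print(dispatch(output))  # prints: tile placed at (2, 5)
```

A call that names an unknown function, omits an argument, or passes the wrong type is rejected instead of executed, which is the failure mode a function-calling model has to avoid to be useful.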

According to Google’s internal "Mobile Actions" evaluation, the model achieves only a 58% baseline accuracy on function-calling tasks before fine-tuning. Once fine-tuned, FunctionGemma’s accuracy jumps to 85%, a success rate comparable to models many times its size. This allows it to parse complex arguments, like specific grid coordinates in a game, not just simple on/off commands.
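The article does not say how the "Mobile Actions" evaluation scores a call. One common way to score function calling is exact match on the function name and its arguments. The sketch below assumes that metric and uses made-up examples, so its result has no relation to the 58% and 85% figures.

```python
from typing import Any

# A call is a dict such as {"name": "set_volume", "arguments": {"level": 3}}.
Call = dict[str, Any]

def calls_match(predicted: Call, expected: Call) -> bool:
    """True only if both the function name and every argument match exactly."""
    return (
        predicted.get("name") == expected.get("name")
        and predicted.get("arguments") == expected.get("arguments")
    )

def exact_match_accuracy(predictions: list[Call], references: list[Call]) -> float:
    """Fraction of predictions that exactly match their reference call."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one example")
    hits = sum(calls_match(p, r) for p, r in zip(predictions, references))
    return hits / len(references)

if __name__ == "__main__":
    # Made-up examples: the third prediction has a wrong grid coordinate.
    references = [
        {"name": "toggle_light", "arguments": {"on": True}},
        {"name": "set_volume", "arguments": {"level": 3}},
        {"name": "place_tile", "arguments": {"row": 2, "col": 5}},
        {"name": "play_media", "arguments": {"title": "news"}},
    ]
    predictions = [
        {"name": "toggle_light", "arguments": {"on": True}},
        {"name": "set_volume", "arguments": {"level": 3}},
        {"name": "place_tile", "arguments": {"row": 2, "col": 4}},
        {"name": "play_media", "arguments": {"title": "news"}},
    ]
    print(exact_match_accuracy(predictions, references))  # prints: 0.75
```

Under this metric a call with the right function but one wrong coordinate counts as a miss, which is why argument-heavy tasks are harder than simple on/off commands.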

Google is providing developers a full "recipe" for success, including:

- The Model: A 270M parameter transformer trained on 6 trillion tokens.

- Training Data: A "Mobile Actions" dataset to help developers train their own agents.

- Ecosystem Support: Compatibility with Hugging Face Transformers, Keras, Unsloth, and NVIDIA NeMo libraries.

Omar Sanseviero, Developer Experience Lead at Hugging Face, noted on X that the model is "designed to be specialized for your own tasks" and can run in "your phone, browser or other devices."

A New Playbook for Production AI

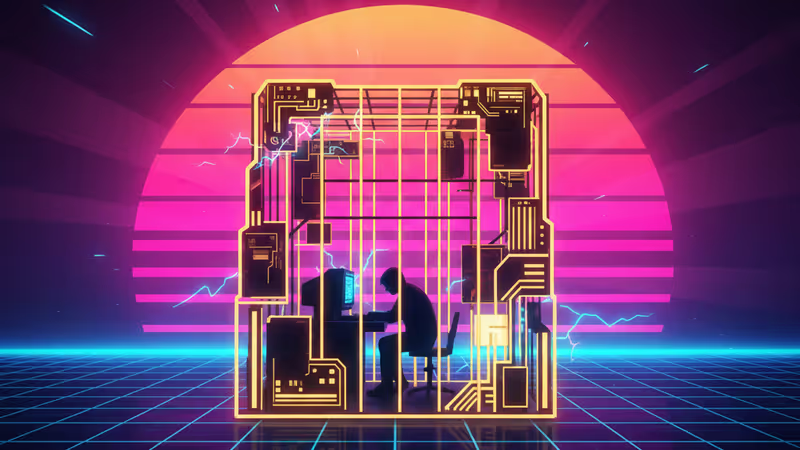

For enterprise developers, FunctionGemma signals a move away from monolithic AI systems toward compound, hybrid architectures. Instead of routing every minor request to a massive cloud model like GPT-4, builders can deploy FunctionGemma as an intelligent "traffic controller" at the edge.

- The "Traffic Controller" Architecture: FunctionGemma acts as the first line of defense on a user's device, instantly handling high-frequency commands like navigation or media control. If a request requires deep reasoning, the model identifies that need and routes it to a larger cloud model. This hybrid approach drastically reduces cloud inference costs and latency.

- Deterministic Reliability over Creative Chaos: Enterprises need their banking apps to be accurate, not creative. The jump to 85% accuracy supports the case that specialization can beat size for production-grade reliability, a non-negotiable for enterprise deployment.

- Privacy-First Compliance by Design: For sectors like healthcare and finance, sending data to the cloud is a compliance risk. Because FunctionGemma runs on-device, sensitive data like PII or proprietary commands never has to leave the device for requests handled locally.
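The "traffic controller" pattern described in the list above can be sketched as a small router. This is a minimal illustration under stated assumptions: `stub_local_model` and `stub_cloud_model` are hypothetical stand-ins for an on-device model and a cloud API, and the `escalate` flag is an assumed way for the local model to signal that a request needs deeper reasoning. The article does not specify how FunctionGemma signals this.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class LocalDecision:
    """What the on-device model decided for one request (hypothetical shape)."""
    function: Optional[str] = None            # local function to call, if any
    arguments: dict = field(default_factory=dict)
    escalate: bool = False                    # True: hand off to the cloud model

def route(
    request: str,
    local_model: Callable[[str], LocalDecision],
    local_functions: dict[str, Callable[..., str]],
    cloud_model: Callable[[str], str],
) -> tuple[str, str]:
    """Return (where the request was handled, the result)."""
    decision = local_model(request)
    handler = local_functions.get(decision.function) if decision.function else None
    # Escalate when asked to, or when no known local function was chosen.
    if decision.escalate or handler is None:
        return ("cloud", cloud_model(request))
    return ("device", handler(**decision.arguments))

# --- Stubs for illustration only; a real app would call actual models. ---
def stub_local_model(request: str) -> LocalDecision:
    if "pause" in request.lower():
        return LocalDecision("media_control", {"action": "pause"})
    return LocalDecision(escalate=True)

def stub_cloud_model(request: str) -> str:
    return f"cloud answer for: {request}"

def media_control(action: str) -> str:
    return f"media: {action}"

LOCAL_FUNCTIONS = {"media_control": media_control}

if __name__ == "__main__":
    for text in ["Pause the music", "Summarize my inbox and draft replies"]:
        print(route(text, stub_local_model, LOCAL_FUNCTIONS, stub_cloud_model))
```

In this design only escalated requests reach `cloud_model`, so the cost and latency savings depend on how many requests the local model can resolve, and the privacy benefit applies only to requests that stay on the device.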

PRISM Insight: FunctionGemma isn't just another model; it's a strategic move to decentralize AI. The industry is shifting from a monolithic, cloud-first architecture to a compound system where specialized edge models act as intelligent gatekeepers. This 'small AI' at the edge, 'big AI' in the cloud pattern will define the next wave of cost-effective, private, and responsive applications, turning the on-device processor into the primary AI brain for everyday interactions.

Licensing: Open-ish With Guardrails

FunctionGemma is released under Google's custom Gemma Terms of Use, a critical distinction from standard open-source licenses like MIT or Apache 2.0. While Google calls it an "open model," it isn't strictly "Open Source" by the OSI definition.

The license allows free commercial use, redistribution, and modification but includes specific usage restrictions (e.g., prohibiting use for generating malware). For most startups, it’s permissive enough to build commercial products. However, teams that require an OSI-approved open-source license should review the specific clauses carefully.
