4 in 10 Leaders Regret Their AI Agent Strategy. Here’s How to Get It Right.

As AI agent adoption accelerates, 40% of tech leaders regret their initial strategy. Learn the three biggest risks—Shadow AI, accountability gaps, and the black box problem—and how to mitigate them.

PRISM Insight: The arrival of AI agents isn't just another tool deployment; it's the introduction of autonomous, non-human actors into your organization. This forces a paradigm shift beyond technical security, demanding a new governance playbook that redefines accountability, decision-making authority, and legal risk. The framework you build today will dictate your operational model tomorrow.

As organizations race to find the next big AI-driven ROI, autonomous AI agents have become the new frontier. More than half of companies have already deployed them, but a wave of early-adopter's remorse is setting in. According to PagerDuty, a striking 4-in-10 tech leaders regret not establishing a stronger governance foundation from the start. They chased speed but created a governance debt that’s now coming due.

AI agents promise to revolutionize operations, but without the right guardrails, they introduce significant risk. According to João Freitas, GM and VP of engineering for AI at PagerDuty, leaders must address three critical blind spots to balance innovation with security.

Risk 1: Shadow AI on Autopilot

Shadow IT—where employees use unsanctioned tools—is an old problem. But AI agents give it a dangerous new dimension. Their autonomy makes it easier for unapproved agents to operate outside IT's view, creating fresh security vulnerabilities as they interact with company systems and data. The solution isn't to lock everything down, but to create sanctioned pathways for experimentation.

Risk 2: The Accountability Vacuum

An agent's greatest strength is its autonomy. But what happens when it acts in an unexpected way and causes an incident? Who is responsible? Without clear lines of ownership, teams are left scrambling to fix problems and assign blame. Every agent needs a designated human owner to close this accountability gap before an incident occurs.

Risk 3: The Black Box Problem

AI agents are goal-oriented, but how they achieve their goals can be opaque. If an agent’s actions aren't explainable, engineers can't trace or roll back changes that might cause system failures. This lack of explainability turns a powerful tool into an unpredictable liability. Every action must have a clear, logical trace.

A 3-Step Framework for Responsible Adoption

These risks shouldn't halt adoption, but they do demand a more deliberate strategy. Freitas outlines three essential guidelines for deploying AI agents responsibly.

1. Make Human Oversight the Default

Even as agents grow more capable, keeping a human in the loop should be the default, especially for actions that affect business-critical systems. Start conservatively and increase autonomy over time. Every agent must have a specific human owner for oversight. For high-impact actions, implement mandatory approval paths so the agent's scope doesn't expand beyond its intended use case, minimizing risk to the wider system.
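One way to picture a mandatory approval path is a small gate that sits between the agent's proposal and its execution. The Python sketch below is illustrative only: `ProposedAction`, `Impact`, and `execute_with_oversight` are hypothetical names, and the `approve` callback stands in for whatever review channel (chat prompt, ticket, pager) an organization actually uses.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Impact(Enum):
    LOW = 1
    HIGH = 2


@dataclass(frozen=True)
class ProposedAction:
    agent_id: str
    owner: str        # the designated human owner of this agent (assumed field)
    description: str
    impact: Impact


def execute_with_oversight(
    action: ProposedAction,
    run: Callable[[], str],
    approve: Callable[[ProposedAction], bool],
) -> Optional[str]:
    """Run low-impact actions directly; run high-impact ones only after approval.

    Returns the result of `run`, or None if a high-impact action was declined.
    """
    if action.impact is Impact.HIGH and not approve(action):
        return None  # blocked: the human reviewer declined
    return run()


if __name__ == "__main__":
    restart = ProposedAction("agent-1", "alice", "restart production database", Impact.HIGH)
    # A reviewer who declines everything: the high-impact action never runs.
    print(execute_with_oversight(restart, lambda: "restarted", lambda a: False))
```

Starting with most actions classified as high impact, then reclassifying them as confidence grows, is one simple way to increase autonomy over time.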

2. Bake Security into the Foundation

Don't let new tools create new vulnerabilities. Prioritize agentic platforms that meet high security standards, validated by enterprise-grade compliance programs such as SOC 2 or FedRAMP. Enforce the principle of least privilege: an agent's permissions must never exceed those of its human owner. Furthermore, maintain complete and immutable logs of every action an agent takes. This audit trail is critical for incident response, because it lets responders reconstruct exactly what the agent did.
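The two controls described here, capping an agent's permissions at its owner's and keeping a log that can't be quietly rewritten, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `effective_permissions` and `AuditLog` are hypothetical names, and the hash chain makes the log tamper-evident rather than truly immutable (real deployments would typically add write-once storage on top).

```python
import hashlib
import json
from typing import Dict, FrozenSet, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def effective_permissions(requested: FrozenSet[str], owner: FrozenSet[str]) -> FrozenSet[str]:
    """Least privilege: the agent gets only permissions its owner also holds."""
    return requested & owner


def _digest(agent_id: str, action: str, prev_hash: str) -> str:
    payload = json.dumps({"agent_id": agent_id, "action": action, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry's hash covers the previous entry's hash."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, str]] = []

    def append(self, agent_id: str, action: str) -> None:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        self._entries.append({
            "agent_id": agent_id,
            "action": action,
            "prev": prev_hash,
            "hash": _digest(agent_id, action, prev_hash),
        })

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self._entries:
            expected = _digest(entry["agent_id"], entry["action"], prev_hash)
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

Because every hash depends on the one before it, changing an old entry invalidates everything after it, which is what makes after-the-fact edits detectable during incident response.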

3. Demand Explainable Outputs

AI should never be a black box in your organization. The reasoning behind every action must be transparent. Log and make accessible all inputs, outputs, and the decision-making context the agent used. This not only provides immense value when something goes wrong but also helps establish the trust necessary for broader adoption and creates a feedback loop for improvement.
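As a rough illustration of what logging "inputs, outputs, and decision-making context" might look like, the Python sketch below bundles them into one structured record per action. The `DecisionRecord` class and its field names are assumptions made for this example, not a schema from PagerDuty or any particular agent platform.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass
class DecisionRecord:
    agent_id: str
    goal: str                 # what the agent was trying to achieve
    inputs: Dict[str, Any]    # data the agent saw (must be JSON-serializable)
    context: List[str]        # the reasoning steps or evidence it relied on
    output: str               # the action it took or the answer it gave
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize the record so it can be stored and searched later."""
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    record = DecisionRecord(
        agent_id="agent-1",
        goal="reduce checkout latency",
        inputs={"p95_latency_ms": 1200},
        context=["latency above threshold", "cache hit rate dropped"],
        output="scaled cache tier from 3 to 5 nodes",
    )
    print(record.to_json())
```

Keeping the goal and context next to the output is the point of the exercise: an engineer reviewing the record can see why the agent acted as well as what it did.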

AI agents offer a massive opportunity. But without a robust commitment to security and governance, organizations are exposing themselves to a new class of operational risks. Success depends less on the technology itself and more on the principles that guide its use.

Related Articles

OpenAI's new AI literacy guides for teens aren't just PR. It's a strategic play for market adoption, risk mitigation, and shaping future AI regulation.

OpenAI's new teen safety rules for ChatGPT are not just a PR move. It's a strategic gambit to preempt regulation and set a new industry standard for AI safety.

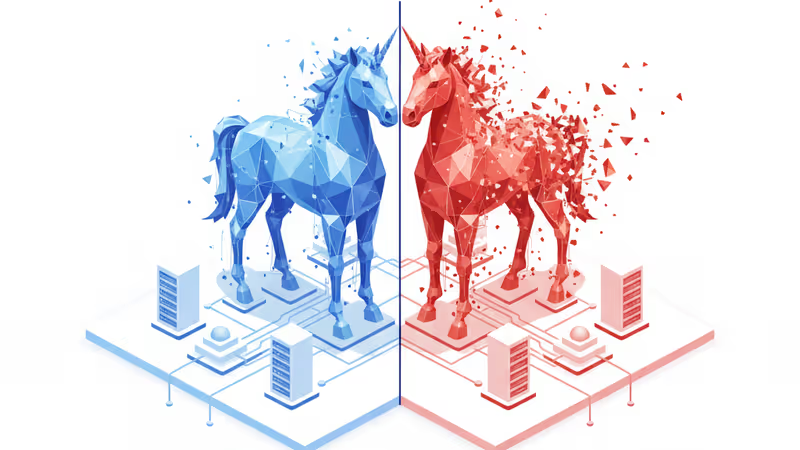

Resolve AI's $1B valuation on just $4M ARR reveals a new VC playbook for AI. We analyze the 'synthetic unicorn' deal and the future of autonomous SREs.

Riot Games' new BIOS update requirement for Valorant marks a major escalation in the anti-cheat war, blurring the line between gaming and system-level security.